Abstract

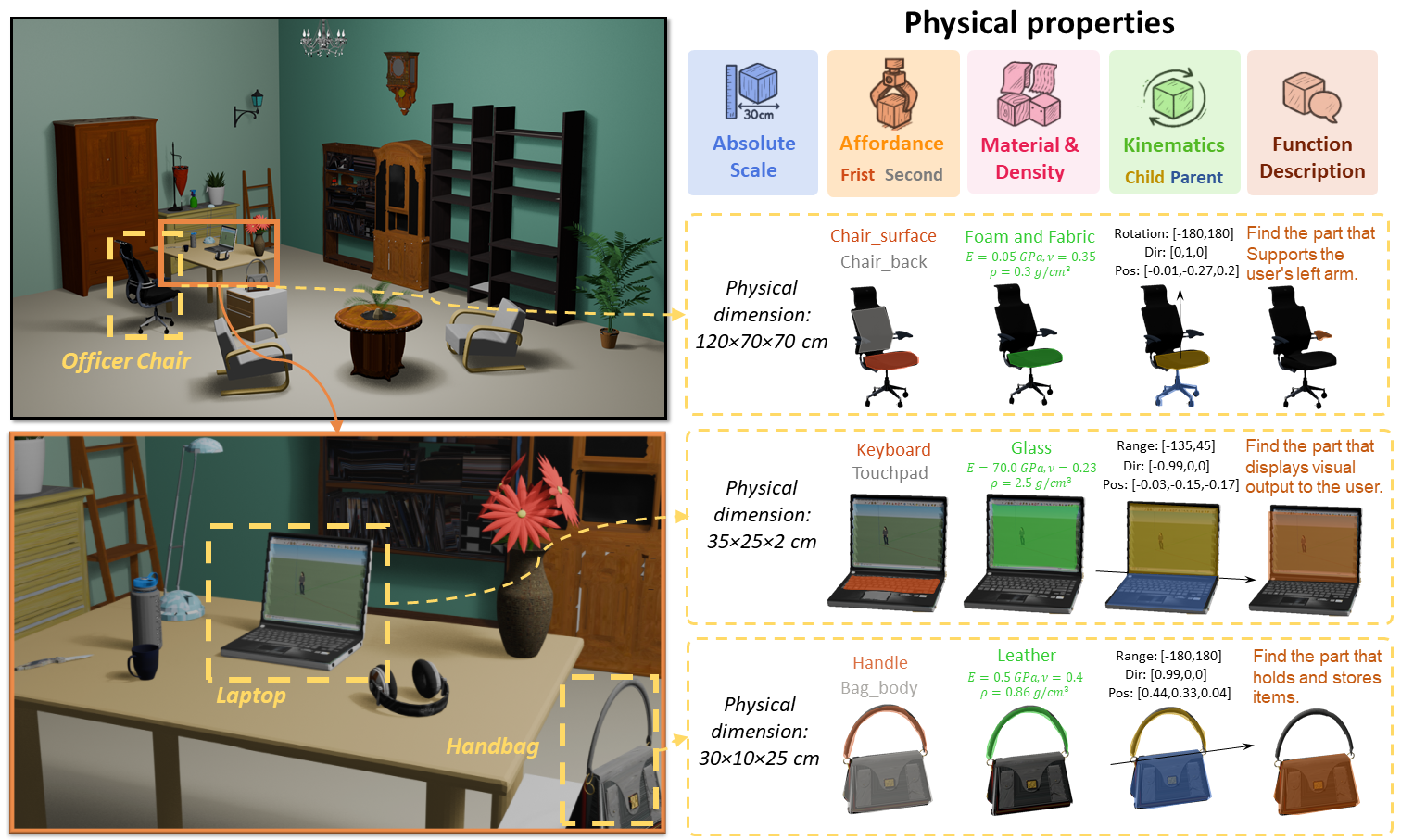

3D modeling is moving from virtual to physical. Existing 3D generation primarily emphasizes geometries and textures while neglecting physical-grounded modeling. Consequently, despite the rapid development of 3D generative models, the synthesized 3D assets often overlook rich and important physical properties, hampering their real-world application in physical domains like simulation and embodied AI. As an initial attempt to address this challenge, we propose PhysX-3D, an end-to-end paradigm for physical-grounded 3D asset generation. 1) To bridge the critical gap in physics-annotated 3D datasets, we present PhysXNet, the first physics-grounded 3D dataset systematically annotated across five foundational dimensions: absolute scale, material, affordance, kinematics, and function description. In particular, we devise a scalable human-in-the-loop annotation pipeline based on vision-language models, which enables efficient creation of physics-first assets from raw 3D assets. 2) Furthermore, we propose PhysXGen, a feed-forward framework for physics-grounded image-to-3D asset generation that injects physical knowledge into the pre-trained 3D structural space. Specifically, PhysXGen employs a dual-branch architecture to explicitly model the latent correlations between 3D structures and physical properties, thereby producing 3D assets with plausible physical predictions while preserving the native geometry quality. Extensive experiments validate the superior performance and promising generalization capability of our framework. All the code, data, and models will be released to facilitate future research in generative physical AI.

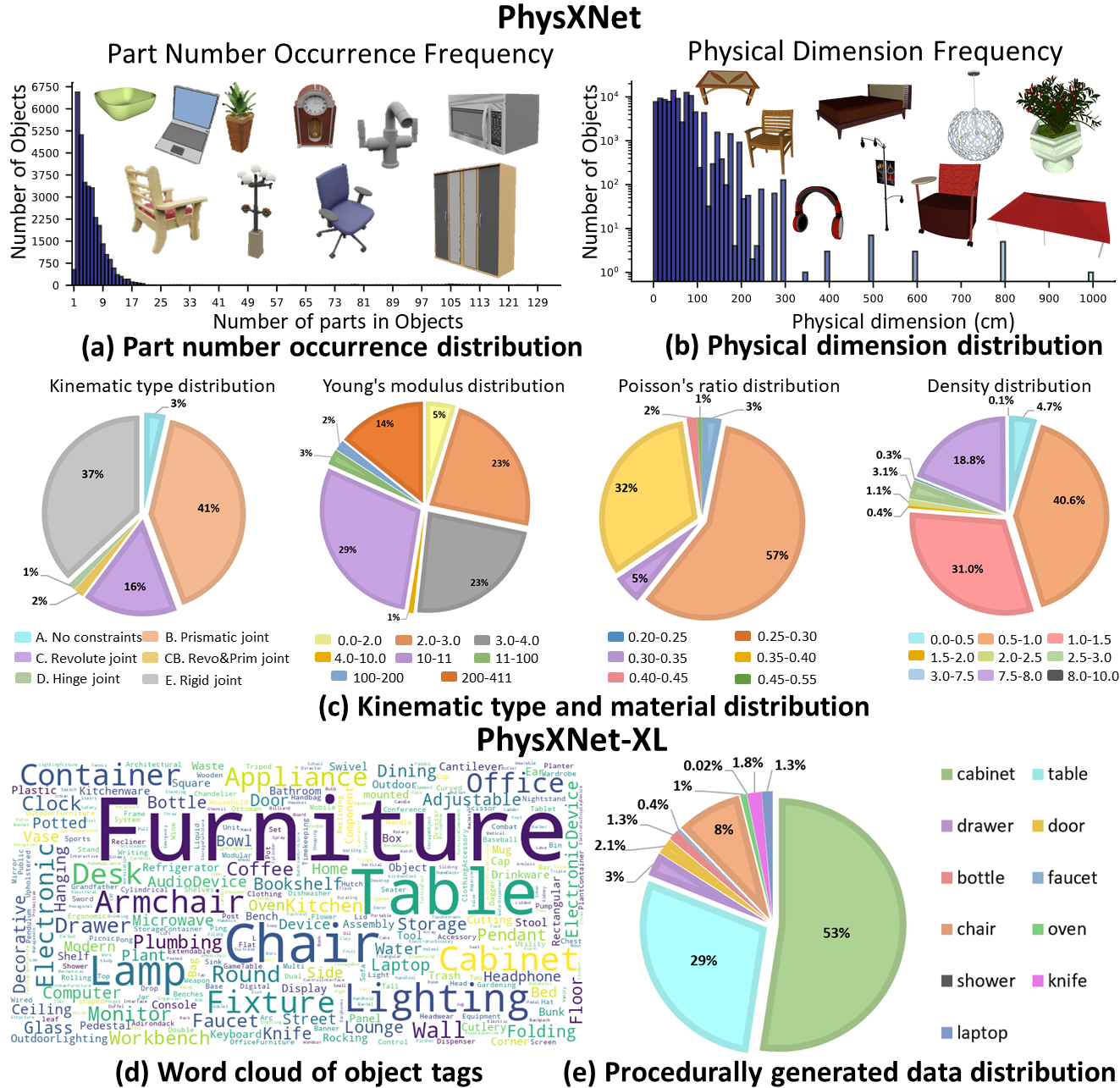

PhysXNet & PhysXNet-XL: Physical-Grounded 3D Data

I. Statistics and Distribution

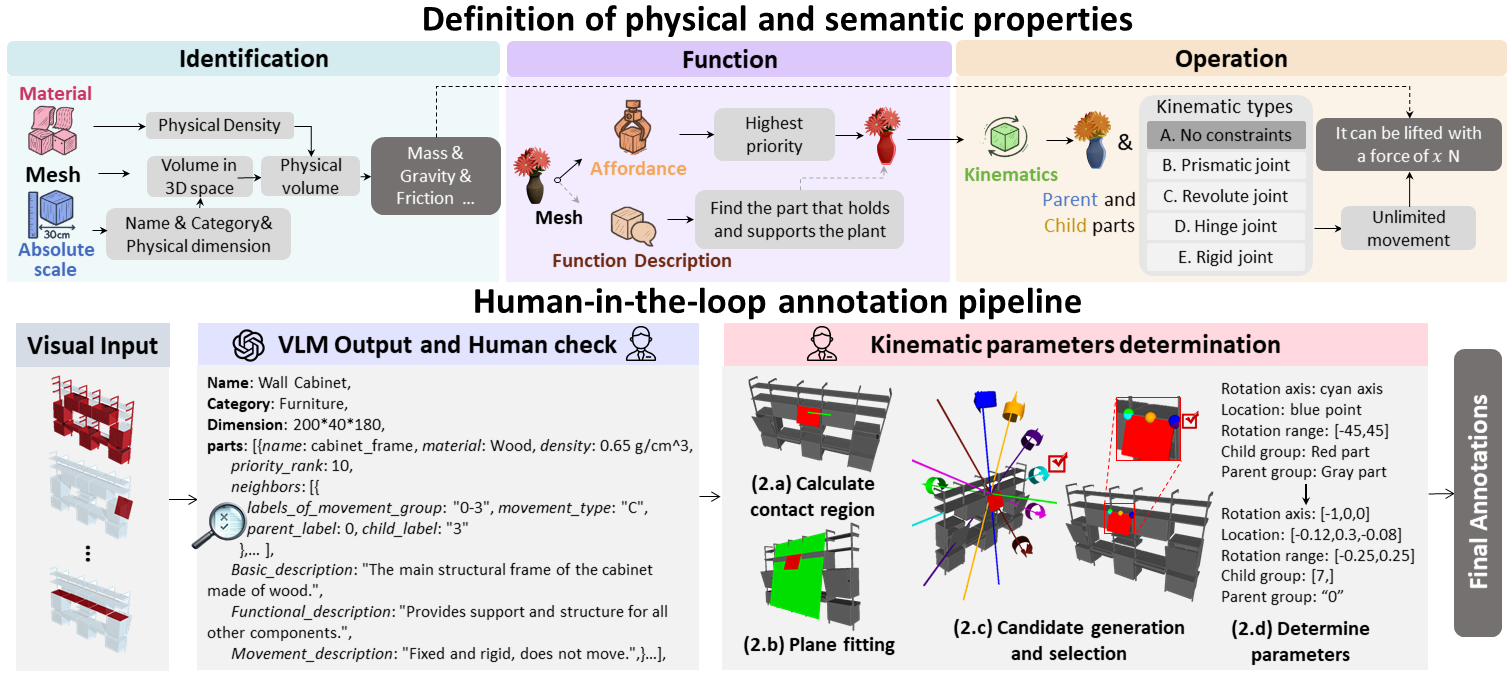

II. Definition of Properties and Our Annotation Pipeline

Top: Definition of properties in PhysXNet. By defining and annotating properties across three categories, common physical quantities can be systematically calculated to enable physical simulations.

Bottom: Overview of our human-in-the-loop annotation pipeline. We use GPT-4o to gather the foundational raw data, which human annotators then verify. The kinematic parameters are then determined and finalized through human review.
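As a rough illustration of how annotated properties can yield a physical quantity, the sketch below estimates a part's mass from its absolute scale and material density. The `PartAnnotation` record, its field names, the density value, and the bounding-box volume approximation are all assumptions made for this example; they are not the PhysXNet schema or the authors' computation.

```python
from dataclasses import dataclass


@dataclass
class PartAnnotation:
    """Hypothetical per-part record; field names are illustrative only."""
    name: str
    size_m: tuple  # bounding-box extents (x, y, z) in meters, i.e. absolute scale
    density_kg_m3: float  # material density in kg/m^3


def estimate_mass_kg(part: PartAnnotation, fill_ratio: float = 1.0) -> float:
    """Approximate mass as density * bounding-box volume * fill ratio.

    fill_ratio is an assumed correction for parts that do not fill
    their bounding box (1.0 means a solid box).
    """
    if not 0.0 <= fill_ratio <= 1.0:
        raise ValueError("fill_ratio must be in [0, 1]")
    x, y, z = part.size_m
    return part.density_kg_m3 * x * y * z * fill_ratio


# Example: a wooden seat board, with an assumed density of 700 kg/m^3.
seat = PartAnnotation(name="seat", size_m=(0.5, 0.5, 0.04), density_kg_m3=700.0)
mass = estimate_mass_kg(seat)  # roughly 7 kg
```

A real pipeline would more likely integrate volume over the part's mesh rather than its bounding box; the box is used here only to keep the arithmetic visible.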

III. Supported Formats

1. JSON

2. URDF

3. XML
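To give a feel for how a JSON annotation might map onto URDF, the following minimal sketch converts a small, made-up record into a URDF string using Python's standard library. The JSON keys (`parts`, `joints`, `mass_kg`, and so on) are hypothetical and do not reflect the actual PhysXNet file layout; only the URDF element names (`robot`, `link`, `joint`, `parent`, `child`, `axis`, `limit`) follow the URDF format itself.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical annotation; the keys are illustrative, not the PhysXNet schema.
annotation_json = """
{
  "name": "cabinet",
  "parts": [
    {"name": "body", "mass_kg": 12.0},
    {"name": "door", "mass_kg": 2.5}
  ],
  "joints": [
    {"name": "door_hinge", "type": "revolute",
     "parent": "body", "child": "door",
     "axis": [0, 0, 1], "lower": 0.0, "upper": 1.57}
  ]
}
"""


def to_urdf(annotation: dict) -> str:
    """Build a bare-bones URDF document from the hypothetical annotation."""
    robot = ET.Element("robot", name=annotation["name"])
    for part in annotation["parts"]:
        link = ET.SubElement(robot, "link", name=part["name"])
        inertial = ET.SubElement(link, "inertial")
        # A complete URDF would also carry an <inertia> tensor and geometry.
        ET.SubElement(inertial, "mass", value=str(part["mass_kg"]))
    for j in annotation["joints"]:
        joint = ET.SubElement(robot, "joint", name=j["name"], type=j["type"])
        ET.SubElement(joint, "parent", link=j["parent"])
        ET.SubElement(joint, "child", link=j["child"])
        ET.SubElement(joint, "axis", xyz=" ".join(str(v) for v in j["axis"]))
        # effort and velocity are placeholder values for this sketch.
        ET.SubElement(joint, "limit", lower=str(j["lower"]),
                      upper=str(j["upper"]), effort="0", velocity="0")
    return ET.tostring(robot, encoding="unicode")


urdf_text = to_urdf(json.loads(annotation_json))
```

The output omits visual and collision geometry, so it is a structural skeleton rather than a simulation-ready file.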

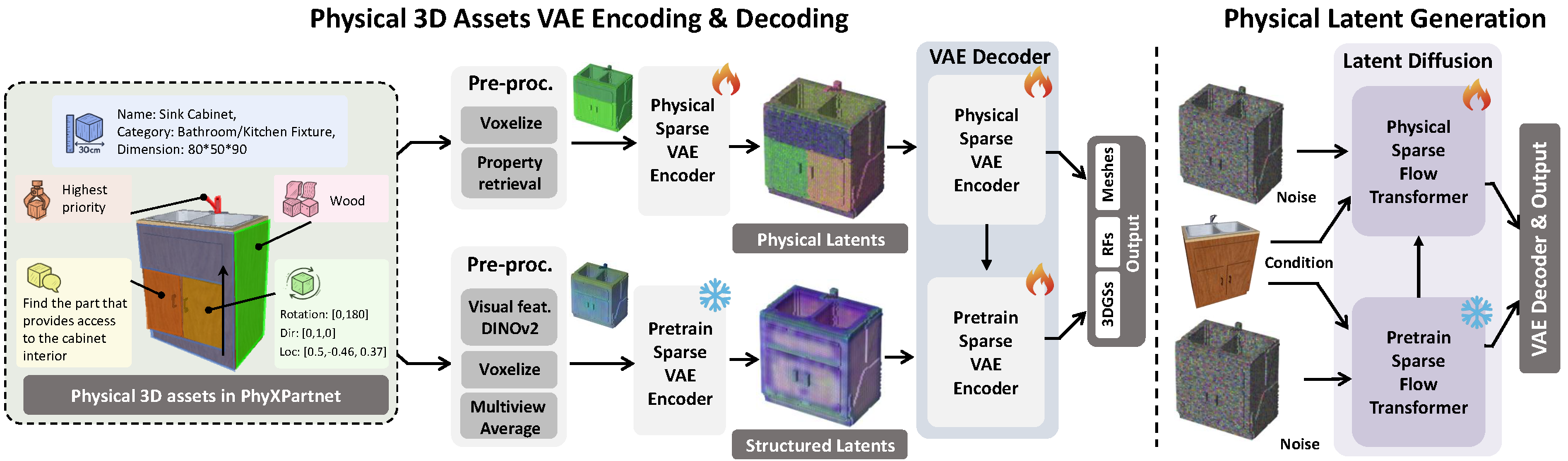

PhysXGen: Physical-Grounded 3D Generation Framework

I. Architecture of Our Method

PhysXGen features a two-stage architecture comprising a physical 3D VAE framework for latent space learning and a physics-aware generative process for the structured latent.

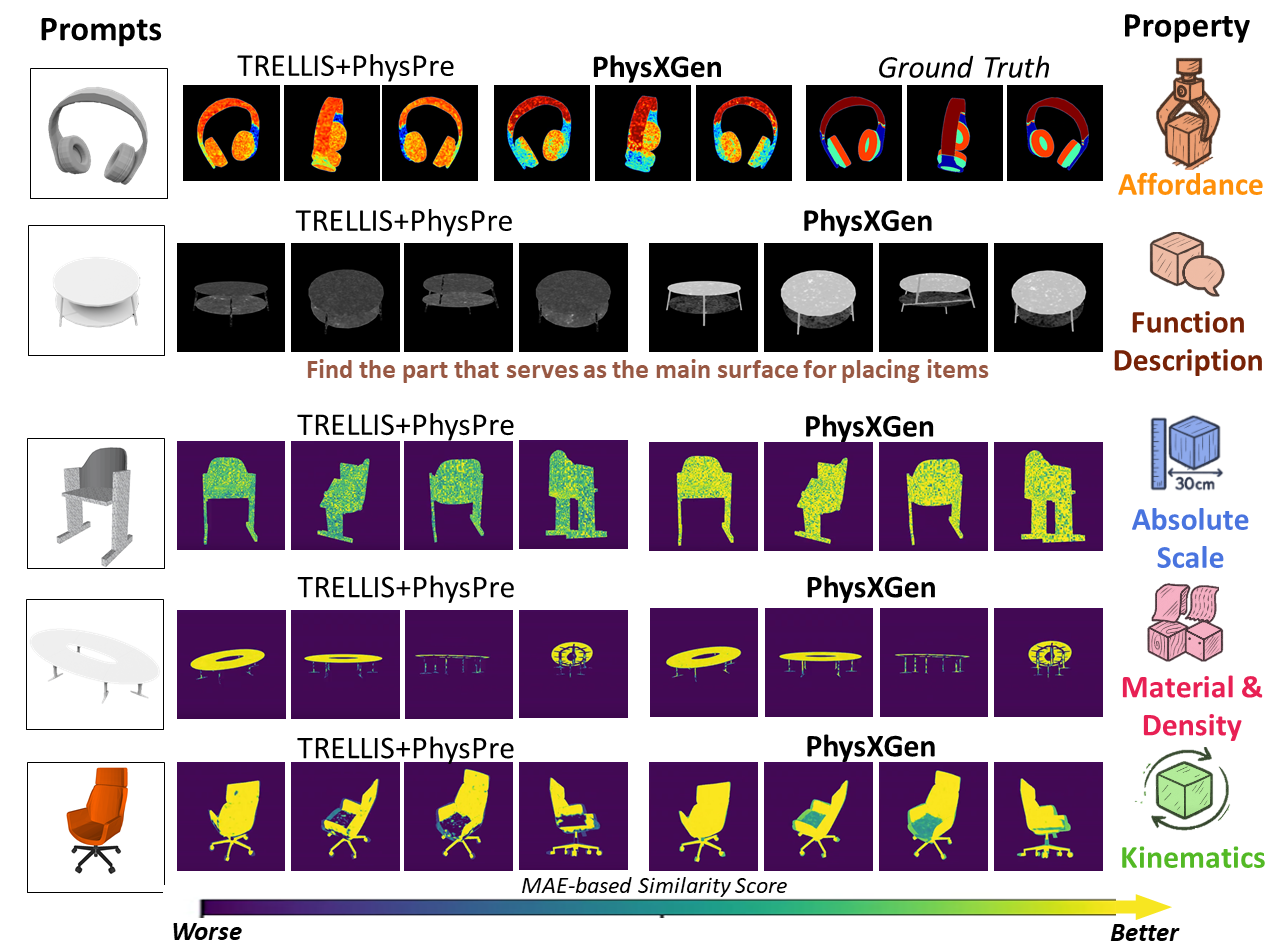

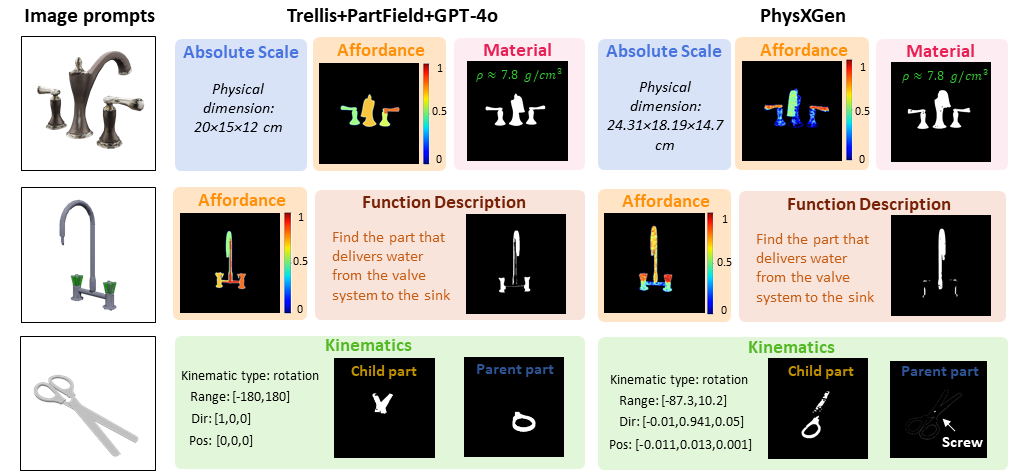

II. Qualitative Comparison

BibTeX

@article{cao2025physx,
  title={PhysX-3D: Physical-Grounded 3D Asset Generation},
  author={Cao, Ziang and Chen, Zhaoxi and Pan, Liang and Liu, Ziwei},
  journal={arXiv preprint arXiv:2507.12465},
  year={2025}
}